The History of NLP: From Early Ideas to Modern Innovations

In this chapter, we'll journey through the history of NLP, tracing its evolution from simple rule-based systems to today's AI-powered language models.

Early Days of NLP: Rule-Based Systems (1950s-1960s)

NLP began with an ambitious goal: teaching machines to understand human language using explicit rules (pre-defined step-by-step instructions) and formal grammars (structured rules that describe the syntax of a language).

Key Milestones

- 1950: Alan Turing proposed the "imitation game," now known as the Turing Test, asking whether machines could "think" like humans. (Turing Test: A test of whether a machine can exhibit intelligent behavior indistinguishable from that of a human.)

- 1954: The Georgetown-IBM experiment automatically translated more than 60 Russian sentences into English. It was the first public demonstration of machine translation.

- Development of formal grammar theories and basic parsing algorithms (methods for analyzing sentence structure).

Practical Example

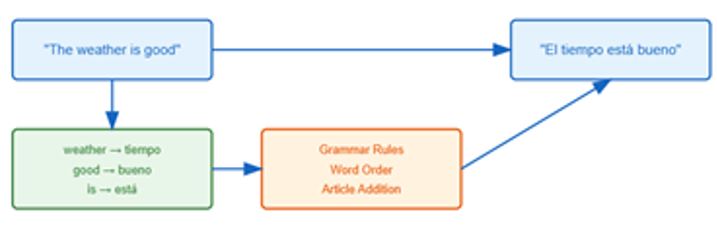

Imagine translating "The weather is good" into Spanish:

- The system identifies words like "weather" and "good."

- A bilingual dictionary (a word-to-word lookup table) maps each word to its Spanish equivalent.

- Grammar rules then arrange the words to form: "El tiempo está bueno."
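The steps above can be sketched in a few lines of Python. This is a toy illustration, not a reconstruction of any historical system. The lexicon, its part-of-speech tags, and the single adjective-noun reordering rule are all hypothetical. They were chosen only to mirror the "dictionary lookup plus grammar rules" idea.

```python
# Hypothetical toy lexicon: English word -> (Spanish word, part of speech).
# Note: "is" could be "es" or "está"; a fixed entry cannot choose by context.
LEXICON = {
    "the": ("el", "DET"),
    "weather": ("tiempo", "NOUN"),
    "is": ("está", "VERB"),
    "good": ("bueno", "ADJ"),
    "red": ("rojo", "ADJ"),
    "car": ("coche", "NOUN"),
}

def translate(sentence: str) -> str:
    """Word-for-word dictionary lookup followed by one reordering rule."""
    words = sentence.lower().rstrip(".").split()
    # Step 1: look up each word; unknown words pass through untranslated.
    tagged = [LEXICON.get(w, (w, "UNKNOWN")) for w in words]
    # Step 2: grammar rule. Spanish adjectives usually follow the noun,
    # so swap an adjective that directly precedes a noun.
    i = 0
    while i < len(tagged) - 1:
        if tagged[i][1] == "ADJ" and tagged[i + 1][1] == "NOUN":
            tagged[i], tagged[i + 1] = tagged[i + 1], tagged[i]
            i += 2
        else:
            i += 1
    # Step 3: capitalize the first word and add a final period.
    text = " ".join(word for word, _ in tagged)
    return text[:1].upper() + text[1:] + "." if text else ""

print(translate("The weather is good"))  # El tiempo está bueno.
print(translate("The red car"))          # El coche rojo.
```

Every new word or construction needs another hand-written entry or rule. Anything outside the lexicon simply passes through untranslated.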

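However, this approach was labor-intensive (every dictionary entry and grammar rule had to be written by hand). It also couldn't scale to language complexities such as idioms (expressions whose meaning differs from the literal meaning of their words) or context (the situation or setting that affects meaning). Even in this simple example, a native speaker would more naturally say "Hace buen tiempo," a phrasing that word-for-word rules would not produce.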

Statistical Revolution (1970s-1980s)

Through the 1970s and 1980s, researchers increasingly recognized that rigid rules couldn't capture the variability of human language. This led to the rise of probabilistic models (methods based on likelihood or probabilities).

Major Developments

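- The widespread adoption of n-gram models (techniques that estimate the probability of the next word from the previous n - 1 words; a bigram model, for example, looks only at the single preceding word).

- ELIZA (1966), often described as the first chatbot, mimicked human conversation. It actually predates this period. It relied on hand-written pattern-matching rules rather than statistics, which limited it to simple conversational patterns.

- Syntax trees became a standard way to represent parsed sentences (sentences broken down into components such as subjects and verbs).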
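To make the n-gram idea concrete, here is a minimal bigram (n = 2) model in Python. It assumes a tiny, made-up corpus. Real systems were trained on far more text and added smoothing so that unseen word pairs do not receive zero probability.

```python
from collections import Counter, defaultdict

# Tiny, made-up training corpus (hypothetical).
CORPUS = [
    "the weather is good",
    "the weather is cold",
    "the weather is good today",
]

def train_bigrams(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the start and end of a sentence.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Maximum-likelihood estimate: P(next | prev) = count(prev, next) / count(prev)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()} if total else {}

def predict_next(counts, prev):
    """Return the most frequent follower of prev, or None if prev was never seen."""
    followers = counts.get(prev)
    return followers.most_common(1)[0][0] if followers else None

model = train_bigrams(CORPUS)
print(predict_next(model, "is"))     # good
print(next_word_probs(model, "is"))  # {'good': 0.6666666666666666, 'cold': 0.3333333333333333}
```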

Real-World Impact

Early statistical models paved the way for innovations such as basic speech recognition and text prediction. The same word-frequency idea later became the basis of email spam filters, which flag a message based on how often spam-associated words appear in it.

The Machine Learning Era (1990s)

The 1990s marked a pivotal (critically important) transition to machine learning-based NLP, allowing systems to learn from data rather than relying on hand-crafted rules.

Key Advances

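- Hidden Markov Models (HMMs) became the dominant approach to speech recognition and were widely used for part-of-speech tagging. (HMMs: Statistical models that treat an observed sequence, such as sounds or words, as generated by a sequence of hidden states, such as phonemes or part-of-speech tags.)

- The creation of the Penn Treebank, a large corpus of English text annotated with part-of-speech tags and syntactic structure, used for training and evaluating NLP models. (Treebank: A text corpus in which each sentence is annotated with its parse tree.)

- Emergence of statistical machine translation, which learned translation probabilities from large collections of translated text instead of relying on hand-built bilingual dictionaries and rules.

- Named Entity Recognition (NER), enabling machines to identify entities like names, dates, and locations. (NER: The task of locating mentions of real-world entities in text and classifying them, for example "Google" as an organization or "New York" as a location.)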
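The HMM idea can be illustrated with the Viterbi algorithm, the standard way to find the most likely sequence of hidden states for an HMM. The sketch below uses part-of-speech tagging rather than speech recognition because words are easier to show than audio. All probabilities are invented for illustration. The small floor value for unseen words (`UNSEEN = 1e-6`) is an assumption standing in for proper smoothing.

```python
# Toy HMM for part-of-speech tagging; every probability here is invented.
STATES = ["DET", "NOUN", "VERB"]
START_P = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}
TRANS_P = {  # P(next tag | current tag)
    "DET":  {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
EMIT_P = {  # P(word | tag)
    "DET":  {"the": 0.7, "a": 0.3},
    "NOUN": {"dog": 0.4, "cat": 0.4, "barks": 0.2},
    "VERB": {"barks": 0.5, "runs": 0.5},
}
UNSEEN = 1e-6  # assumed floor probability for words a tag never emits

def viterbi(words):
    """Return the most probable tag sequence for words under the toy HMM."""
    # best[t][tag] = (probability of the best path ending in tag at position t, previous tag)
    best = [{s: (START_P[s] * EMIT_P[s].get(words[0], UNSEEN), None) for s in STATES}]
    for t in range(1, len(words)):
        row = {}
        for s in STATES:
            emit = EMIT_P[s].get(words[t], UNSEEN)
            # Keep only the best way of arriving in tag s at position t.
            row[s] = max((best[t - 1][p][0] * TRANS_P[p][s] * emit, p) for p in STATES)
        best.append(row)
    # Follow the back-pointers from the most probable final tag.
    tag = max(STATES, key=lambda s: best[-1][s][0])
    path = [tag]
    for t in range(len(words) - 1, 0, -1):
        tag = best[t][tag][1]
        path.append(tag)
    return path[::-1]

print(viterbi(["the", "dog", "barks"]))  # ['DET', 'NOUN', 'VERB']
```

Here "barks" could be either a noun or a verb. The transition probabilities (a verb is likely to follow a noun) resolve the ambiguity. Practical implementations usually work with log-probabilities to avoid numerical underflow on long sequences.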

Example in Action

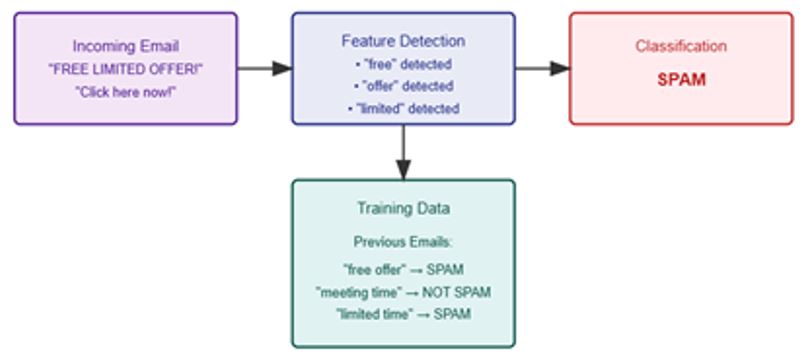

Think of classifying emails as spam or non-spam:

- Features: Words like "free," "offer," or "limited time."

- Labels: Marked as either SPAM or NON-SPAM.

- The system learns which features are associated with each label from thousands of labeled emails, then uses them to classify new messages.
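The spam example maps naturally onto a multinomial naive Bayes classifier. This is one common choice for the task; the text itself does not name a specific algorithm. The sketch below assumes a tiny, made-up labeled dataset. It uses add-one (Laplace) smoothing so that a word never seen with a label does not zero out that label's score.

```python
import math
from collections import Counter

# Hypothetical labeled training emails: (text, label).
TRAINING = [
    ("free offer limited time", "SPAM"),
    ("claim your free prize now", "SPAM"),
    ("limited time offer act now", "SPAM"),
    ("meeting notes from today", "NON-SPAM"),
    ("lunch tomorrow with the team", "NON-SPAM"),
    ("project update and meeting agenda", "NON-SPAM"),
]

def train(examples):
    """Count label frequencies and per-label word frequencies (the features)."""
    label_counts = Counter()
    word_counts = {}
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return label_counts, word_counts, vocab

def classify(text, label_counts, word_counts, vocab):
    """Pick the label with the highest log P(label) + sum of log P(word | label)."""
    total = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total)
        denominator = sum(counts.values()) + len(vocab)
        for word in text.lower().split():
            # Add-one smoothing: unseen words get a small, non-zero probability.
            score += math.log((counts[word] + 1) / denominator)
        scores[label] = score
    return max(scores, key=scores.get)

model = train(TRAINING)
print(classify("free offer now", *model))      # SPAM
print(classify("team meeting today", *model))  # NON-SPAM
```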

Deep Learning Revolution (2010s-Present)

Since the early 2010s, NLP has seen an explosion of innovation driven by deep learning (a subset of machine learning using neural networks with many layers).

Breakthrough Technologies

- Word Embeddings: Techniques like Word2Vec (2013) and GloVe (2014) map words to numerical vectors that capture aspects of their meaning. (Word Embeddings: Representing words in vector spaces where similar words are closer together.)

- Transformers and Attention Mechanisms: The Transformer architecture, introduced in 2017, underlies models like BERT and GPT. These models represent each word in light of its surrounding context. (Transformers: A neural network architecture that processes an entire sequence at once using attention instead of reading it word by word. Attention: A mechanism that lets a model weigh how relevant each word in a sentence is to every other word.)

- NLP applications now include real-time translations, human-like chatbots, and text summarization.
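Both breakthroughs reduce to simple arithmetic on vectors. The sketch below uses hand-picked 3-dimensional vectors. These are hypothetical, not real Word2Vec or GloVe output, which is learned from text and typically has hundreds of dimensions. The sketch shows two things: cosine similarity, a common measure of closeness between embeddings, and scaled dot-product attention, the weighting at the core of Transformers. It simplifies real attention by using the raw embeddings as queries, keys, and values; Transformers first multiply them by learned weight matrices.

```python
import math

# Hand-picked toy embeddings (hypothetical, for illustration only).
EMBEDDINGS = {
    "king":  [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.12],
    "apple": [0.10, 0.05, 0.90],
}

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    """Cosine similarity: values near 1.0 mean the vectors point in nearly the same direction."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention: weights = softmax(q . k / sqrt(d)); output = weighted sum of values."""
    d = len(query)
    weights = softmax([dot(query, k) / math.sqrt(d) for k in keys])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output

king, queen, apple = (EMBEDDINGS[w] for w in ("king", "queen", "apple"))
print(round(cosine(king, queen), 2))  # 1.0 (very similar)
print(round(cosine(king, apple), 2))  # 0.22 (dissimilar)

# How much should "king" attend to each word in a three-word context?
weights, _ = attention(king, [king, queen, apple], [king, queen, apple])
print([round(w, 2) for w in weights])  # "apple" receives the smallest weight
```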

Modern Example

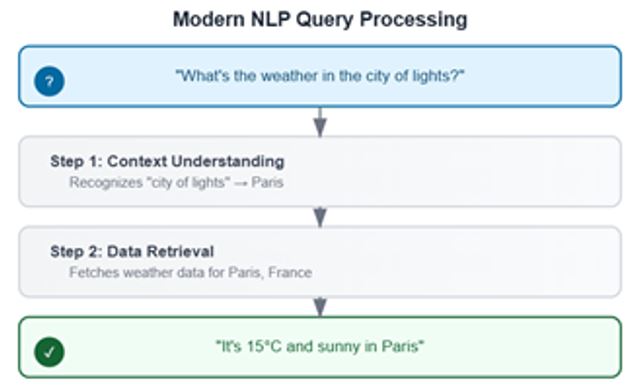

When you ask a virtual assistant, "What's the weather in the city of lights?" it:

- Recognizes that "city of lights" refers to Paris (context understanding).

- Fetches weather data for Paris, France.

- Responds with: "It's 15°C and sunny in Paris."

Challenges and Future Directions

Current Challenges

- Contextual Understanding: Handling idioms and ambiguous phrases. (Ambiguity: When a sentence can have multiple meanings.)

- Bias in AI: Reducing prejudice inherent in training datasets. (Bias: A tendency for AI models to favor certain groups unfairly due to flawed training data.)

- Resource Efficiency: Managing the computational demands of large language models.

Future Innovations

- Multimodal NLP: Integrating text with images or audio for richer interaction. (Multimodal: Combining multiple forms of data like visuals and text.)

- Few-Shot Learning: Enabling NLP models to learn tasks with minimal data.

- Cross-Lingual Understanding: Seamlessly connecting languages in global systems.

Conclusion

From its humble beginnings to its current role as a driver of technological innovation, Natural Language Processing has come a long way. By blending rule-based logic, statistical models, and deep learning, NLP has transformed how we interact with machines. As the field continues to evolve, we stand on the cusp (edge or brink) of even greater breakthroughs.
